Discover how legal AI like Pre/Dicta revolutionizes litigation predictions with advanced legal analytics and forecasts.

Bim Dave is a seasoned expert in legal technology, boasting over 20 years of experience in the industry. His extensive background spans technical support, team management, and global technical services delivery. Currently serving as EVP at Helm360, Bim has a proven track record of driving quality and customer satisfaction, making him an ideal voice on the implications of legal AI and analytics in the legal profession.

As EVP at Helm360, Bim oversees products and services including AI chatbots and data discovery tools. His previous roles at Thomson Reuters, where he managed global technical services and led innovative projects in legal software, further cement his expertise. Bim’s passion for customer service and deep technical knowledge make him a leading authority on legal AI and its transformative potential.

Podcast Summary

Dan Rabinowitz, co-founder of Pre/Dicta, discusses the transformative impact of AI-powered litigation prediction tools on the legal industry. Pre/Dicta uses behavioral analytics and extensive datasets to predict motion-to-dismiss outcomes with about 85% accuracy, focusing on judicial behavior rather than case facts. This approach helps lawyers make informed decisions about motion strategy, settlement timing, and litigation costs. By offering insights into judge-specific patterns and case timelines, Pre/Dicta helps law firms optimize litigation strategies and resource allocation, leading to improved client outcomes.

Rabinowitz highlights the benefits of AI in addressing inefficiency and opacity in the legal field. He emphasizes Pre/Dicta’s role in budgeting, cost forecasting, and managing litigation risk. With its intuitive interface and its ability to account for judicial and case-specific variables, Pre/Dicta is reshaping how law firms approach case strategy, making AI an indispensable tool in litigation.

Listen Here: https://helm360.com/tlh-ep-31-how-ai-in-litigation-will-transform-the-legal-industry/

Introduction and Guest Background 0:03

Bim Dave: Hello, Legal Helm listeners. Today, I am delighted to be speaking with Dan Rabinowitz, CEO and co-founder of Pre/Dicta, an AI-powered litigation prediction software.

- Introduction of Dan Rabinowitz, highlighting his roles at the US Department of Justice, a Washington, DC-based data science company, and WellPoint Military Care.

- Brief overview of Pre/Dicta and its capabilities in predicting case outcomes and motions to dismiss.

Dan’s Journey to Pre/Dicta and Interest in AI for Legal Prediction 1:16

Dan Rabinowitz: I began my career as an associate at a large law firm and was tasked with predicting a judge’s ruling on a motion to dismiss in a product liability case.

- Challenges faced in using traditional methods to predict outcomes.

- Realization of the lack of transparency in the judicial process and the potential for using AI and data science.

Concept of Using AI and Behavioral Analytics in Legal Predictions 5:56

Dan Rabinowitz: When it comes to judicial decisions, our analysis showed that patterns can be discerned by AI, not by humans.

- Introduction to the idea of using behavioral analytics to understand judicial behavior.

- Comparison to predictive capabilities of Google or Netflix.

Data Points and Accuracy of AI Predictions 8:28

Bim Dave: Fantastic, that’s really, really interesting. Are you able to elaborate on some of the other data points?

- Explanation of biographical characteristics and proprietary data used in predictions.

- Emphasis on 85% accuracy in predicting motions to dismiss without considering the facts or the law.

Impact on Litigation Strategies 9:56

Bim Dave: Got it, excellent. How are you seeing lawyers that are currently using Pre/Dicta’s insights inform their litigation strategy today?

- Discussion on how Pre/Dicta informs litigation strategies from both plaintiff and defense perspectives.

- Examples of strategic decisions influenced by AI predictions, such as motions to dismiss and class certification.

Strategic Benefits and Cost Management 15:06

Bim Dave: The cost management piece must be a real differentiator.

- Discussion on how Pre/Dicta helps in forecasting costs and managing litigation budgets.

- Explanation of how attorneys can use AI predictions to make informed decisions on settlement offers and litigation strategies.

AI Bias and Ensuring Fairness 21:53

Bim Dave: We can’t talk about AI without talking about AI bias in legal predictions.

- Explanation of how Pre/Dicta addresses AI bias by using massive data sets and independent testing.

- Importance of backtesting and forward testing for consistent accuracy.

Overcoming Resistance to AI in Law Firms 24:23

Bim Dave: Are you seeing any resistance to getting into a law firm and presenting a solution that is AI-driven?

- Addressing the conservative nature of law firms and skepticism towards AI.

- Explanation of how Pre/Dicta’s accuracy and user-friendly interface help overcome resistance.

Practical Integration and Usability of Pre/Dicta 29:35

Bim Dave: Can you talk us through what it takes to actually get started on the platform and how easy it is to adopt for a law firm?

- Details on the cloud-based nature of Pre/Dicta and the intuitive design for ease of use.

- Mention of quick training time and customizable report exports.

Future of AI in Law Firms Beyond Litigation Prediction 31:24

Bim Dave: Looking ahead, where do you see AI playing a role in law firms beyond litigation and litigation prediction?

- Dan discusses the potential for AI to create efficiencies in repeatable tasks.

- Insights on entering new areas of law that were previously limited by human capacity.

TLH EP31 How AI in litigation …m the legal industry – Helm360

Mon, Jul 22, 2024 12:21PM • 36:53

SUMMARY KEYWORDS

attorneys, case, judge, ai, motion, prediction, dismiss, summary judgment, plaintiff, law firm, firm, settlement, lawyer, forecast, client, relates, provide, inevitably, litigation, minority report

SPEAKERS

Dan Rabinowitz, Bim Dave

Bim Dave 00:03

Hello, Legal Helm listeners. Today, I am delighted to be speaking with Dan Rabinowitz, CEO and co-founder of Pre/Dicta, an AI-powered litigation prediction software. Dan’s background includes work for the US Department of Justice, serving as general counsel for a Washington, DC-based data science company, and serving as Associate General Counsel, Chief Privacy Officer, and Director of Fraud Analytics for WellPoint Military Care. He went on to co-found Pre/Dicta, which uses forward-thinking data science around judges to predict case outcomes, motions to dismiss, and much more. In today’s podcast, I’m going to be talking to Dan about how AI in litigation will transform the legal industry. Dan, hello and welcome to the show.

Dan Rabinowitz

Hi there. Thank you for having me.

Bim Dave

So, Dan, it would be great for our audience to get to know you a little bit more. Your background is really interesting because it bridges law and data science. I’m really interested to learn about the journey that led you to Pre/Dicta and what initially sparked your interest in AI for legal prediction.

Dan Rabinowitz 01:16

Yeah. So I actually began my career as an associate at a large law firm, and I still vividly remember being tasked with trying to, if you will, predict or forecast a particular judge’s ruling on a motion to dismiss in a product liability case. The direction was to pull all of the opinions the judge had written on motions to dismiss. Needless to say, there were maybe a dozen, and most, if not all, were irrelevant to products cases. Of course, as an associate, you’re tasked by the partner, who presumably has had the conversation with the client, so you go ahead and draft the memo. But at the same time, it struck me that there must be a better way to understand the judge. Prior to that exercise, we had done extensive research and briefing on the motion to dismiss itself. So there was this critical component, the judge who was ultimately going to read and hear the arguments, and yet we were at a huge disadvantage in forecasting how that particular judge would rule. And that’s always the case. The judge is a black box. With everything else in litigation there’s a lot of transparency, but here there was almost none. Now, fast forward to when I’m at the data analytics company, and even before that, when I’m exposed to other technologies in the legal space, and you get the sense that the legal space is significantly lagging behind the commercial space. That doesn’t mean you need to create something from scratch specifically for legal, although of course whatever technology you use has to be tailored for legal.
But you’re sitting in 2010 or 2015, and you see the advances in technology we’ve had in the previous 10 to 15 years, and yet when it comes to legal, the best we could say at the time was that we had slightly improved e-discovery. None of the transformation we saw in the general commercial space really seemed to bleed into legal. Then I was exposed to the idea that you can really understand people using any number of factors beyond the obvious ones, and it struck me that the same might apply to judges. So rather than simply looking at a limited number of opinions, as in the exercise I went through, there had to be a way to better understand judicial behavior. A model for this might be Google or Netflix, or any of the services we use that seem to have an uncanny ability to predict what we want to watch next, what we want to buy next, or where we want to travel next. And yet none of that was being deployed in the legal space. At the same time, there was the idea that you could use statistics about the judge to glean what they’ll do in the future. But that suffers from the same problems as reading the opinions, and in some ways is worse, because the statistics available now, and back then, are limited: all they do is tally the judge’s total number of, for example, grants and denials. To keep it simple, say a judge has 100 decisions. Maybe they wrote opinions on only five of those, but they have 100 decisions, and they granted 65 of them.
That, of course, tells you nothing about your particular case, because if all 100 of those are irrelevant, say they involve smaller cases or totally different types of attorneys or arguments, then those 100 are not only unhelpful, but using them statistically can actually lead you down the wrong path. So understanding the limitations of the legal technology at the time, plus understanding what the potential was, led me to the idea that we could use behavioral analytics to analyze judges and provide meaningful, trustworthy forecasts and predictions.
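Dan’s point about tallies can be illustrated with a toy example: a judge’s overall grant rate can diverge sharply from the rate on the decisions that actually resemble your case. The sketch below is hypothetical throughout. The docket is invented to match the 65-of-100 example, and the `comparable` flag stands in for whatever similarity criteria a real analysis would apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One motion-to-dismiss ruling (hypothetical record format)."""
    granted: bool
    comparable: bool  # hypothetical flag: resembles the case at hand


def grant_rate(decisions: list[Decision]) -> float:
    """Share of decisions granted: a plain tally, as in the example."""
    if not decisions:
        raise ValueError("no decisions to summarize")
    return sum(d.granted for d in decisions) / len(decisions)


# Hypothetical docket matching the 65-of-100 example:
# 90 dissimilar rulings (63 grants) and 10 comparable ones (2 grants).
docket = (
    [Decision(granted=True, comparable=False)] * 63
    + [Decision(granted=False, comparable=False)] * 27
    + [Decision(granted=True, comparable=True)] * 2
    + [Decision(granted=False, comparable=True)] * 8
)

overall = grant_rate(docket)
similar = grant_rate([d for d in docket if d.comparable])
print(f"overall: {overall:.0%}, comparable cases: {similar:.0%}")
# overall: 65%, comparable cases: 20%
```

Ten comparable rulings is a thin sample in its own right; the example only shows how far an aggregate tally can drift from the subset that matters.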

Bim Dave 05:56

That’s really, really interesting. Are you able to elaborate on some of the other data points you consider that might influence predictions?

Dan Rabinowitz 06:05

Sure. When it comes to judicial decisions, our analysis showed that there are patterns we can discern, and by “we” I don’t mean me, anyone on my team, or any human; it’s the AI that’s able to go through massive data sets and find those non-obvious patterns. Those patterns are reflected in the attorneys and the parties and their interrelationship with the judge’s biographical profile. So we can look at different attorney types and classify them: this attorney works for a New York-based firm with 1,000 attorneys and a certain amount of revenue, against another firm based in the Midwest with 10 attorneys, and so on. Then we do a similar exercise with the parties. There are patterns you can determine, and then you have to link those patterns with particular biographical characteristics. To get to your question of which biographical characteristics we look at: we have dozens and dozens, and, as you would imagine, a substantial portion of them are proprietary. But the more obvious ones relate to the judge’s experience: where they went to law school, where they practiced, who appointed them, when they were appointed, whether they were a state court judge before being elevated to the federal bench, as well as, for example, income and net worth. Because, to go back to the Google or Netflix example, those services aren’t simply looking at what movies or TV shows you watched in the past 10 days or the past year.
They also understand where we live, who our neighbors are, our educational background, and so on, and that’s how they’re able to provide precise forecasts. It’s the same with our analysis of judicial characteristics. We look at the ones that are more closely linked to law, but also at many others that are not obviously linked to the law.

Bim Dave 08:28

Fantastic. And what does that lead to in terms of accuracy? What kind of accuracy are you seeing in the prediction outcomes?

Dan Rabinowitz 08:36

Our predictions on motions to dismiss are about 85% accurate, and it’s important to emphasize that this is without regard to the facts or the law. What we’re doing is separate and apart from what an attorney does in terms of legal research, discovery, fact finding, and the like; we’re wholly focused on the judge. When we focus on the judge, we assume the attorney has done their job, and we want to understand how that argument, that brief, or that motion is filtered through that human being. Therefore we don’t have to look at the briefs, and we don’t have to look at the complaint. When I say we’re 85% accurate, the only information the user inputs is the case number. There’s no further input from the user, and while we run a real-time analysis at that point, it doesn’t include analyzing the brief or any of the particular legal arguments.

Bim Dave 09:45

Got it. Excellent, excellent. So how are you seeing lawyers who currently use Pre/Dicta’s insights inform their litigation strategy today?

Dan Rabinowitz 09:56

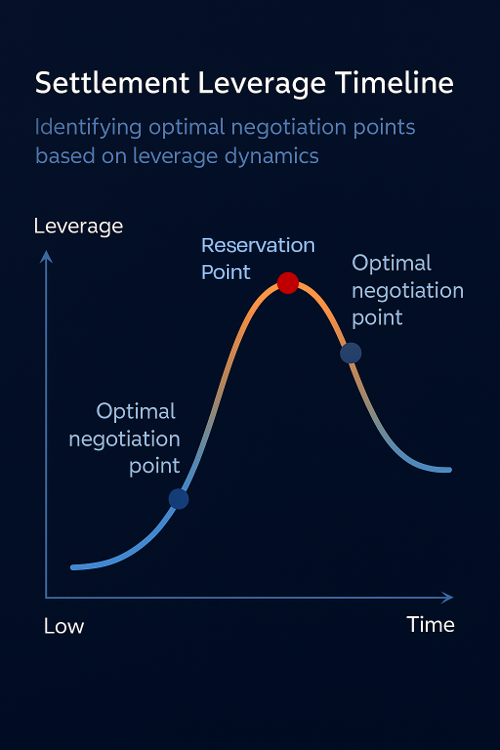

Yeah. So there are a number of ways our insights influence strategy in a very meaningful way. Let’s work our way through a case. At the motion to dismiss stage, we can provide that prediction even before filing. From a plaintiff’s perspective, we can make an assessment either prior to filing or after the judge is assigned, to tell them whether the case has a high probability of passing go, that is, surviving the motion to dismiss and getting to discovery, which, from a plaintiff’s perspective, is key to getting a settlement or moving beyond the motion. On the flip side, many defense counsel assume it always makes sense to file a motion to dismiss, and there is some legitimacy in that position. Even if you’ve hired a big firm, a motion to dismiss might cost you $60,000 to $100,000, but if it is granted, it could save you tens of millions of dollars. But there is also a strategic advantage if you know the motion to dismiss will be denied. To file a motion to dismiss, you have to disclose all the perceived limitations and faults in the plaintiff’s complaint. So if there is no actual benefit, in other words, if we can say with 85% certainty that the motion will be denied, you have to think hard. Should I disclose that now and give the plaintiff a roadmap of the areas they need to correct, or where we’re obviously going to be hitting them at depositions and throughout discovery? Or do I hold that back and move to an answer? Maybe I actually get very aggressive now, because I want to get to discovery as quickly as possible.
Unlike a traditional piece of litigation where a big firm might delay, not necessarily in a bad way, but string things out, a large firm also has the benefit of nearly unlimited resources. So if they want to push to discovery very quickly, they can, and that puts the plaintiff on the back foot. Plus, they have this intelligence they’re holding back about where they’re going to attack. That then moves into two other motion types where it’s also really important to have specific insight into how the judge will rule. The first that we provide is class certification. Class certification, of course, is game-changing. If a class is certified, the entire tenor of the case changes. It shifts from the defendant maybe getting out with a small settlement to a massive settlement, and plaintiffs’ lawyers rush in to sign up as many plaintiffs as they can. Conversely, if the class certification motion is going to be denied, that’s also a huge factor from the defense perspective: now they can either start settling cases or actually litigate cases to trial, depending on how they see it. Now let’s take the defense side. You’re involved in a case, the plaintiff files a class certification motion, and at the same time the plaintiff comes to you and says, I’m willing to settle this case today for $25 million. Is that a good settlement offer or not? If they’re going to win class certification, that might be a terrific offer. If, however, they’re going to lose class certification, you may have just overspent by whatever factor that is.
So if you use Pre/Dicta and our forecast says the class motion is likely to fail, you push back on the plaintiff’s offer, maybe knock it down even further. On the other hand, if you don’t have that certainty, and your client is, say, very concerned about the pending motion, you’re trying to counsel them with actual intelligence, and that’s really hard to do. It’s a coin toss at that point. Yes, you can go to your arguments, and yes, you can go to the law, but we all know that if you give two judges the exact same briefing, they can come out differently. How do you account for that? How can you be so certain about a settlement offer that you’re going to bet the house on it blindly, based only on the facts and the law, when different judges can come out differently on the same facts and law, which means those are non-predictive? So that’s a great example of where you could use this in a very strategic way, especially if you’re on one side of the v. and you’re the only one that has been prescient enough to purchase our technology, while the other side, for whatever reason, didn’t go down that route. The same goes for summary judgment. Knowing you’re going to win or lose on summary judgment has a huge impact on settlement, and also on when and how to file, whether you’re going to push for trial, and so on. So there are any number of strategic benefits to having this insight and intelligence into what’s going to happen next.
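Dan’s class certification example is, at bottom, an expected-value comparison. The sketch below is a minimal illustration under stated assumptions, not Pre/Dicta’s method: the probability that the class is certified, the defense’s exposure in each branch, and the further fees are all hypothetical figures, and the defense is assumed to be risk-neutral.

```python
def expected_cost_of_litigating(p_certified: float,
                                exposure_if_certified: float,
                                exposure_if_denied: float,
                                further_fees: float) -> float:
    """Probability-weighted cost to the defense of rejecting the offer.

    All inputs are hypothetical; p_certified stands in for a forecast
    that the class certification motion will be granted.
    """
    if not 0.0 <= p_certified <= 1.0:
        raise ValueError("p_certified must be between 0 and 1")
    expected_exposure = (p_certified * exposure_if_certified
                         + (1.0 - p_certified) * exposure_if_denied)
    return expected_exposure + further_fees


offer = 25e6  # the plaintiff's settlement offer from the example
for p in (0.8, 0.2):
    # Hypothetical: $80M exposure if certified, $5M if not, $3M more fees.
    cost = expected_cost_of_litigating(p, 80e6, 5e6, 3e6)
    verdict = "accept" if offer < cost else "push back"
    print(f"P(certified)={p:.0%}: expected cost ${cost / 1e6:.1f}M -> {verdict}")
# P(certified)=80%: expected cost $68.0M -> accept
# P(certified)=20%: expected cost $23.0M -> push back
```

The same $25 million offer flips from attractive to overpriced as the forecast probability moves, which is the decision Dan describes as otherwise being a coin toss.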

Bim Dave 15:06

Yeah, that’s really interesting. And I think the cost management piece must be a real differentiator. If I’m a lawyer and I can forecast and manage the costs of the case and show that to my client, that would be a real differentiator and give the client real value from a solution like Pre/Dicta.

15:31

Hey, John, how do I find out what we’ve invoiced my client? How do I submit my expenses again?

15:39

It’s exciting to have fresh faces at your law firm, but onboarding them takes a lot of time and energy. When everyone is remote, you’re busy enough as it is. Helm360 has the solution. Just ask Termi, Helm360’s next-level chatbot solution for knowledge management. Termi can answer many of your new hires’ questions for you without distracting you or anyone else at the firm. This means fewer frantic emails, fewer help desk tickets, and more time and more focused productivity for everyone at the firm. Working with Termi, you can get those eager new employees up to speed in a snap. They may never need to ask you a question again. Check out helm360.com/termi to see Termi in action and find out how it can make onboarding new hires as easy as sending an email.

Dan Rabinowitz 16:25

Yes, and in fact, we have another capability that can be used to great effect for budgeting and, frankly, even for winning work. When attorneys go in to pitch any client, they’re inevitably competing against a peer group with similar offerings, whether in terms of experience or the level of resources they have. So how do you differentiate yourself? Attorneys go in and provide an estimate: this case is going to cost $15 million to litigate. Of course, attorneys can also say it’s going to cost $15 million if it goes to summary judgment, but if we have to go to trial, it’s going to cost $25 million, and settlement inevitably takes longer or shorter than summary judgment. But when attorneys come up with those budgets, they really have no idea. At best, firms have a pricing function that looks at cases in the aggregate and says, we’ve looked at 100 or 500 of our cases, and on average summary judgment takes this many hours, which translates into a $10 million cost. But each of those cases might be entirely different from the one you’re pitching for: different judges, different parties, any number of things. So we provide forecasts for the entire litigation lifecycle, and again, those forecasts are not averages for the judge. We don’t say that, on average, a judge takes X number of days to get to summary judgment; we’re looking at the specific case. And beyond summary judgment, to provide real forecasts, you have to go through each potential outcome.
When a lawyer comes in, they can’t say for certain that the case will be dismissed at the motion to dismiss stage, or that it will go to summary judgment, or that it will settle. So the only way to truly forecast timelines is for the timelines to account for all the potential outcomes. Our timelines are outcome-based: we provide the number of days it will take to reach a resolution at summary judgment, versus settlement, versus a dismissal on the motion to dismiss, versus trial, and so on. The attorney can use each of those when pitching for work, not simply saying, I’ve looked at our internal pricing function, but instead, I’ve looked at the specifics of the case with the specifics of the judge, and we’ve concluded the case will go one of two ways: settlement or summary judgment. If it goes to summary judgment, here’s the cost, and if it goes to settlement, here’s the cost. Aside from estimates and budgets, this also matters a great deal for settlement strategy. Suppose that going to summary judgment will take an extra year and a half and, to return to the earlier example, the plaintiff has made a settlement offer today. You might think your case is pretty strong and that the plaintiff doesn’t have a strong enough case to justify the $25 million they’ve put on the table. But until Pre/Dicta, you couldn’t really factor in what going to summary judgment would cost you.
If we turn down the $25 million offer, and it takes another year and a half of attorney time, that could literally be millions of dollars, and that should be accounted for when you’re deciding how to respond to a settlement offer. The same applies if the case is going to trial. An attorney might say, we have a very strong case, I’ve seen a case just like this, and we could beat these guys at trial. But that’s not without cost, because attorneys are in a service industry and are paid by the hour, and inevitably it’s not just the partner; who knows how many people are on the case, and that can carry a very substantial cost. So this enables attorneys and their clients to make an informed decision about whether a settlement offer makes sense, based not only on the strength of their case but also on the cost of going forward or not.
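Outcome-based timelines of the kind Dan describes lend themselves to a simple budgeting calculation: price each resolution path from its forecast duration, then weight it by its likelihood. The sketch below assumes hypothetical probabilities, durations, and a flat daily fee rate; it is not Pre/Dicta’s forecasting model or output format, and real fee burn is rarely linear.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeForecast:
    """One resolution path and its forecast (hypothetical inputs)."""
    name: str
    probability: float    # likelihood the case resolves this way
    days_to_outcome: int  # forecast time until that resolution


def path_costs(forecasts: list[OutcomeForecast], daily_fees: float) -> dict[str, float]:
    """Cost of each path, assuming fees accrue at a flat daily rate."""
    return {f.name: f.days_to_outcome * daily_fees for f in forecasts}


def expected_budget(forecasts: list[OutcomeForecast], daily_fees: float) -> float:
    """Probability-weighted budget across all forecast outcomes."""
    if abs(sum(f.probability for f in forecasts) - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(f.probability * f.days_to_outcome * daily_fees for f in forecasts)


forecasts = [
    OutcomeForecast("settlement", 0.6, 400),
    OutcomeForecast("summary judgment", 0.4, 950),  # ~1.5 years longer
]
daily_fees = 10_000.0  # hypothetical blended team cost per day

for name, cost in path_costs(forecasts, daily_fees).items():
    print(f"{name}: ${cost:,.0f}")
print(f"probability-weighted budget: ${expected_budget(forecasts, daily_fees):,.0f}")
# settlement: $4,000,000
# summary judgment: $9,500,000
# probability-weighted budget: $6,200,000
```

The 550-day gap between the two paths is roughly the extra year and a half mentioned above, and at the assumed rate it adds $5.5 million to the cost of fighting rather than settling.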

Bim Dave 20:52

Yeah, it’s huge. I was just talking to a firm about producing reports. In the UK, we have Precedent H reports, which focus on budgeting a case so that the client has visibility of what’s happening. What you’re describing is amazing, because a lot of that is, as you say, a little wishy-washy about what’s actually going to happen in terms of outcomes, and it really becomes a tracking and monitoring exercise for cases going out of control from a cost perspective. This gives you that visibility way ahead of time, so you can see what the outcomes are likely to be. So I can see that having huge benefit and merit. And since we’re talking about AI solutions, we can’t talk about AI without talking about AI bias in legal predictions. How does Pre/Dicta address those concerns and make sure the algorithms you’re using are fair?

Dan Rabinowitz 21:53

It’s a great question. This problem has really been brought to the fore, especially with the new generative form of AI and its hallucinations, or false positives, if you will. On the one hand, it’s incredibly important to recognize that there are certain capabilities that are just beyond us as humans. Call it humility or, looking at it the other way, being mercenary and taking advantage of everything you possibly can. AI does have tremendous power, it certainly should be deployed, and it should be part of every attorney’s and client’s arsenal. But how do we ensure that we don’t have bias, and that we’re not getting false positives? One way is by using massive data sets and then testing them independently for accuracy. What we don’t do is simply take a small segment of, let’s say, the judge’s biography. We don’t look at the five factors that people think are somehow signals of what the judge will do. We go far beyond that, and we’re constantly incorporating additional factors, to the extent we have access to them, without necessarily knowing whether they will have an impact; we let the data tell us whether they matter. So we are agnostic about the data we use, and once we’ve used that data agnostically, we test all of it for accuracy. It’s never in a vacuum. It’s never simply that we’ve arrived at something and believe it to be very meaningful.
All of this is tested against tens of thousands, or hundreds of thousands, of motions to ensure the results can be replicated over and over again. And it’s not only backtesting; we’re also doing forward testing. We had an original data set that we built some of the models with, but over the years we’ve acquired additional data, and we want to make sure we have that consistency, both backward-looking and forward-looking. It’s really about ensuring that your data is large enough to account for biases and for most of the edge cases, and, most significantly, that you’re willing to test and verify it.
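Backtesting and forward testing are both time-based evaluations: fit on older decisions, score on held-out history, then score again on decisions that arrived after the model was built. The sketch below illustrates only that evaluation split. The record format, the feature key, and the majority-vote predictor are hypothetical stand-ins, not Pre/Dicta’s models or features, which Dan describes as substantially proprietary.

```python
from collections import Counter, defaultdict
from datetime import date

# Hypothetical record format: (decision date, feature key, outcome).
# The feature key is a placeholder for whatever judge/attorney/party
# profile a real model would use.
Record = tuple[date, str, str]


def fit_majority(records: list[Record]):
    """Deliberately trivial stand-in model: majority outcome per feature key."""
    by_key: dict[str, Counter] = defaultdict(Counter)
    for _, key, outcome in records:
        by_key[key][outcome] += 1
    fallback = Counter(o for _, _, o in records).most_common(1)[0][0]
    table = {k: c.most_common(1)[0][0] for k, c in by_key.items()}

    def predict(key: str) -> str:
        return table.get(key, fallback)

    return predict


def accuracy(predict, records: list[Record]) -> float:
    if not records:
        raise ValueError("empty evaluation set")
    return sum(predict(key) == outcome for _, key, outcome in records) / len(records)


def back_and_forward_test(records: list[Record], train_cutoff: date, model_built: date):
    """Backtest on held-out history, then forward-test on later decisions."""
    train = [r for r in records if r[0] < train_cutoff]
    backtest = [r for r in records if train_cutoff <= r[0] < model_built]
    forward = [r for r in records if r[0] >= model_built]
    predict = fit_majority(train)
    return accuracy(predict, backtest), accuracy(predict, forward)


records = [
    (date(2019, 3, 1), "A", "grant"), (date(2019, 6, 1), "A", "grant"),
    (date(2019, 9, 1), "B", "deny"), (date(2020, 2, 1), "A", "grant"),
    (date(2020, 5, 1), "B", "deny"), (date(2021, 4, 1), "A", "deny"),
    (date(2021, 8, 1), "B", "deny"),
]
print(back_and_forward_test(records, date(2020, 1, 1), date(2021, 1, 1)))
# (1.0, 0.5)
```

A drop from backtest to forward-test accuracy, as in this toy data, is the kind of inconsistency that forward testing is meant to surface.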

Bim Dave 24:23

Yep, makes sense. I want to move on to something you touched on earlier, which is the legal profession being, let’s say, a little traditional, and maybe sometimes a little behind the times. Are you seeing any resistance when you go into a law firm and present an AI-driven solution such as yours? If so, how are you overcoming that resistance, and how are you educating firms that this is the way forward?

Dan Rabinowitz 24:53

Yeah, as as, as you would imagine, there certainly is resistance here, I would say, on. Two levels. First, that correct, that you know, generally, there is a conservative element to most law firms. And then secondarily, or perhaps almost primarily, because we aren’t looking at the facts on the law, it’s really difficult sometimes for attorneys to sort of seed that as it relates to predictions. Right? How is it possible that we Predicta can do a better job of forecasting and predicting what a judge will do when this attorney has spent hundreds of hours looking at the facts in the law? So of course, then we have to educate them and explain that what we’re doing is not litigating a case. We’re coming in and providing transparency where there’s opacity with as it relates to the judge and their particular preferences, predilections and biases. And in order to get around that, the approach is not the law on the facts. The approach is to use well established methods as it relates to predictive analytics, behavioral analytic all of this has been used for over a decade, if not longer, in the commercial space. So a this is trustworthy. This has been tested. This has been deployed. This is used in everyday life. So that’s an approach that we have as it relates to that conservative Street, the other part of the ego, if you will, right, that the that the lawyer can do this already, you know, that’s where we show our accuracy, that we can actually go back and demonstrate over hundreds of cases that we are accurate. You know, I had a very interesting experience where I was working with a plaintiff’s firm, and they were skeptical of, you know, our ability to predict outcomes versus what, you know, what, how they view cases. And of course, this was a very large plane of sperm, and they’re self funding most of these cases, so it’s their dollars on the line. And they provided a list of the 25 you know, recent cases. 
And sure enough, we predicted 21 out of 25 correctly, whereas, of those 25, 11 were dismissed. To put it another way, only 14 were successful, which is only slightly better than a coin toss. So they're risking millions, tens of millions of dollars, on something slightly better than a coin toss. That really, I think, hammers home the point that, however adept we believe we are at assessing people's personalities, inevitably we are unable to do that to the degree that AI can. And at the end of the day, part of this also comes down to the idea that, whether or not these attorneys want to step into the AI realm, they need to understand that if their opponent has that information and they don't, as in the class certification example I discussed earlier, they are at a real disadvantage. They don't know whether to push for settlement. They don't know whether to decline what seems to be a very reasonable settlement offer, but their opponent does. Their opponent has that information, that intelligence, and to be without it is such a disadvantage. Whether or not you believe, quote unquote, in the product, or whether or not you really want to get into the AI game, sitting it out is no longer an option. It's there, and your opponent will certainly have it at some point.
Moreover, there's how we designed our technology and the user interface. I practiced as a lawyer at a large firm where there was, of course, a, quote, minimum billable hour expectation, which is never really what you should shoot for. When the firm pushed out new technology, it was incredibly frustrating, because inevitably it was some technology that someone, maybe not even a lawyer, had decided would be very valuable, and you had to spend hours understanding it, and then there were inefficiencies built in every time you had to use it. So our user interface is designed to be incredibly simple. It gives you the answers immediately. There are no additional calculations the attorney has to make. There's no parsing of the data. There's no further filtering. You don't have to run through five or 10 filters or do 10 iterations. As I said, we've done all the work in building the models and then pulling in information about a case, and then we provide our clients with what they want, which are forecasts and predictions, not a statistical exercise.
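To make the comparison in Dan's plaintiffs' firm anecdote concrete, here is a minimal Python sketch of the arithmetic. It uses only the figures quoted in the conversation (25 cases, 21 correct predictions, 11 dismissals); the variable names are our own, and "successful" is read here simply as "not dismissed", which is an assumption about what the firm counted.

```python
# Figures quoted in the conversation (the firm's 25 recent cases).
TOTAL_CASES = 25
CORRECT_PREDICTIONS = 21  # forecasts that matched the actual outcome
DISMISSED = 11            # cases the firm lost to dismissal

def rate(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100.0 * part / whole

# Share of the 25 outcomes the model forecast correctly.
prediction_accuracy = rate(CORRECT_PREDICTIONS, TOTAL_CASES)

# Share of the 25 cases that were not dismissed (assumed meaning of "successful").
firm_success_rate = rate(TOTAL_CASES - DISMISSED, TOTAL_CASES)

print(f"Prediction accuracy:    {prediction_accuracy:.0f}%")  # 84%
print(f"Firm's case success:    {firm_success_rate:.0f}%")    # 56%
print(f"Coin-toss baseline:     {50:.0f}%")
```

On these numbers the forecasts were right 84% of the time, while the firm's own case selection succeeded 56% of the time, a few points above the 50% coin-toss baseline Dan mentions.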

Bim Dave 29:35

Fantastic. So if I'm a law firm listening to this right now, I'm thinking, okay, but this must be quite complicated to get up and running on. Can you talk us through what it takes to actually get started on the platform, and how easy it is to adopt?

Dan Rabinowitz 29:52

As I said, it's designed to be intuitive. It's cloud-based; you sign on with a username and password. I would say if you give us half an hour, we can probably train everyone in the law firm. In terms of forecasts and predictions, it takes between 10 and 15 seconds to provide those. All of them can be exported into a report for the client, and the report is customizable in terms of which aspects they want to show. In terms of usability, integration and training, again, having practiced as a lawyer, I made sure we spent a considerable amount of time specifically ensuring that the user interface was as intuitive as possible and the learning curve as low as possible.

Bim Dave 30:45

Yeah, that's really good to hear. I think the biggest challenge with a lot of products on the market is the time-to-value proposition, right? And that sounds perfect, because ultimately you need something you can drop in and see value from day one, which it sounds like you can, and that ultimately leads to larger adoption. So really pleased to hear that. Looking ahead, in terms of your view on AI and the role you see it playing in a law firm, not just in litigation and litigation prediction but beyond, where do you see things moving?

Dan Rabinowitz 31:24

I think there are two classes of AI. One class is the class that creates efficiencies, right? Let's say contract drafting, or any repeatable or quasi-repeatable task. Today some of those seem too complex to cede to AI, but I think inevitably AI will cover most of those repeatable tasks. Now, I actually think that's a net positive for law firms. If you go back to when e-discovery was being pushed out, lawyers were up in arms that it was going to take away jobs, make the process more complicated, that this was not good for the practice of law. And what we've seen is the contrary: law firms still exist, big law firms have gotten bigger, and revenue has skyrocketed. So repeatable tasks should be, if you will, ceded to technology, to the extent that it makes sense and to the extent that the tools are trustworthy. I think we're going to push into more and more of the tasks within the traditional law firm buckets, but that will enable attorneys to really hone their craft, whether that's litigation and arguments or acquisitions. Rather than spending time on reviews or the like, they can really understand some of the complexities and maybe structure deals in a very different way, just because they have the leeway to do it. So certainly, with those repeatable tasks and wherever we can create efficiencies, we're going to keep moving in that direction and capture more and more of them. Now, of course, there's a whole other set of use cases for AI, similar to what we do, which is entering a space where, even if you had 10,000 attorneys working out the patterns of judges, it wouldn't get you anywhere.
So there are going to be other areas of the law where we think we are limited by human capacity, and we're going to crack those boxes open using AI. What those are, I can't say; otherwise I would be starting another company. But I'm certain there are other use cases beyond simple efficiencies, areas within the law where we can enable and empower lawyers to get to places they otherwise could not.

Bim Dave 33:59

So just to be clear, you're not building a pre-crime prediction solution like Minority Report, right?

Dan Rabinowitz 34:04

No, no, I am not building a Minority Report. Although I was lucky enough, when I was an intern in Washington, DC, to be where they were shooting part of Minority Report, and I got to watch Tom Cruise walk through. They had transformed the area: they had taken all the placards along the walkways and changed them into those screens that would play your face and give you that really creepy feeling. But no, we are certainly not going to Minority Report. Nor, and I think this is sometimes important for attorneys, is our prediction determinative, right? There's a difference between predicting that something will happen a particular way and determining that it therefore will happen that way. We are not saying that a judge must go this way. We're simply saying that, based on the data and on separating the signal from the noise, this is what our predictive models say.

Bim Dave 35:06

That makes total sense. Dan, it's been fantastic talking to you about this subject. Really fascinating. I have a couple of wrap-up questions, if I may, the first of which is: if you could borrow Doctor Who's time machine and go back to Dan at 18 years old, what advice would you give him?

Dan Rabinowitz 35:24

Be more open to opportunities earlier on, and don't necessarily go with, if you will, what people view as traditional. Traditional is great, but other opportunities should always at least be examined, if not seized upon. Even if you don't go in that direction at that point in time, it can inform subsequent decisions and subsequent life experiences.

Bim Dave 35:57

Fantastic advice. And your favorite travel destination so far?

Dan Rabinowitz 36:03

That's a hard one. I actually travel a lot, and the travel destination I'm going to give you is, I would assume, probably the only one you're going to get on your podcast: my favorite city right now is in Lithuania. It's the capital, Vilnius, which happens to be a beautiful city, very small. They still have an old town that's very picturesque.

Bim Dave 36:26

Yeah, fantastic. That's definitely a new one for the podcast, so I'll add that one to the bucket list for sure. Dan, it's been a pleasure talking to you today. Thank you very much for sparing your time to talk to us. It was really informative. Thank you. We hope you enjoyed today's episode of The Legal Helm. Thank you, as always, for listening. If you enjoyed the show, please like and subscribe on iTunes or wherever you get your podcasts. It really helps us out.